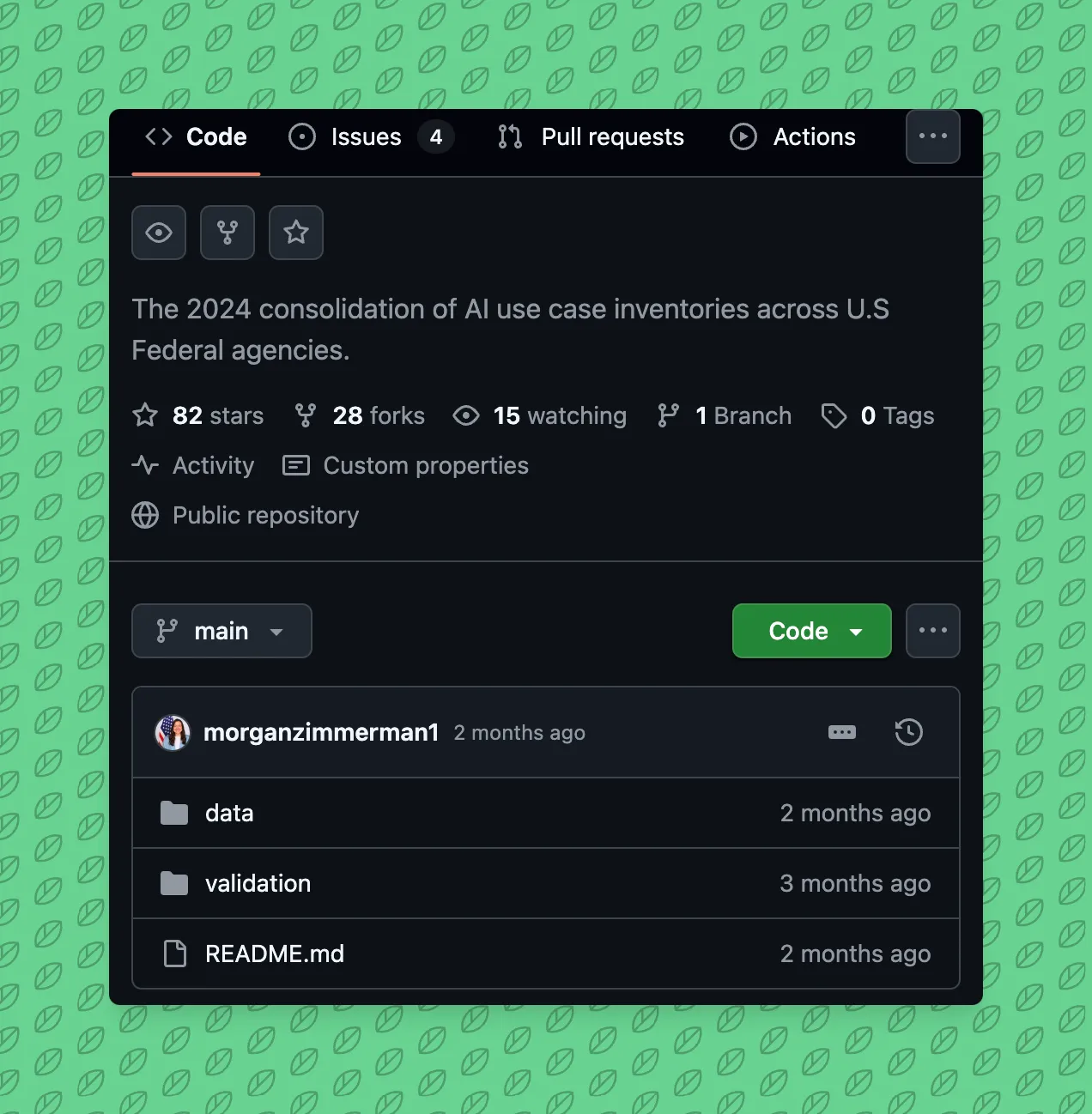

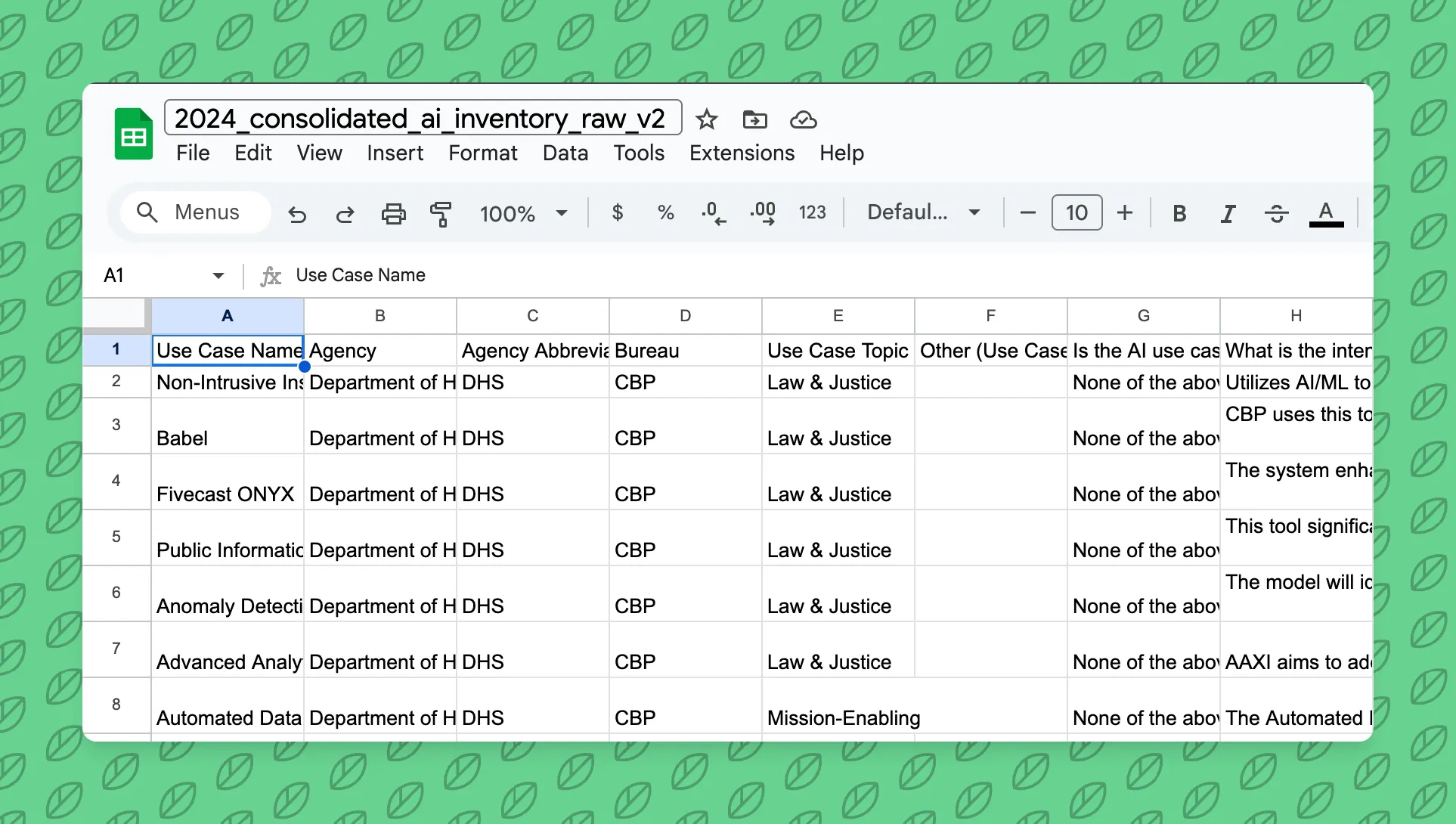

The White House recently released the 2024 Federal AI Use Cases Inventory, and it’s massive - 2,133 AI use cases across 41 federal agencies. According to reporting from FedScoop, this represents a significant increase from last year’s inventory, though exact previous numbers are a bit murky.

Important context: This inventory was completed under Executive Order 14110 on Safe, Secure, and Trustworthy AI, which was rescinded on January 20, 2025. The regulatory landscape around federal AI is currently in flux, but this inventory still provides valuable insights into how agencies were approaching AI adoption.

Having spent significant time in government tech, including work on government design systems and tracking federal website performance, I wanted to dig into this data and see what’s actually happening with AI adoption across federal agencies.

Let’s start with some high-level findings that jumped out at me:

What’s most interesting to me is which agencies are investing heavily in “rights-impacting” or “safety-impacting” AI applications. The inventory shows 351 such use cases, with the VA accounting for 145 of them and the DOJ reporting 124. That makes sense given these agencies’ missions directly affect benefits, law enforcement, and health services.
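To put those counts in proportion, here is a quick back-of-the-envelope sketch in Python. It uses only the figures quoted above (2,133 total use cases, 351 rights- or safety-impacting, 145 at the VA, 124 at the DOJ); the variable and function names are my own and nothing here comes from the inventory's actual data files.

```python
# Figures as quoted in this post; names are illustrative, not from the inventory.
TOTAL_USE_CASES = 2133
IMPACTING_USE_CASES = 351  # rights-impacting or safety-impacting
IMPACTING_BY_AGENCY = {"VA": 145, "DOJ": 124}


def pct(part: int, whole: int) -> float:
    """Return part as a percentage of whole, rounded to one decimal place."""
    return round(100 * part / whole, 1)


# Share of all reported use cases flagged as rights- or safety-impacting.
impacting_share = pct(IMPACTING_USE_CASES, TOTAL_USE_CASES)

# Each agency's share of the flagged use cases.
agency_shares = {
    agency: pct(count, IMPACTING_USE_CASES)
    for agency, count in IMPACTING_BY_AGENCY.items()
}

# Share of the flagged use cases held by the VA and DOJ together.
combined_share = pct(sum(IMPACTING_BY_AGENCY.values()), IMPACTING_USE_CASES)

print(f"Flagged share of all use cases: {impacting_share}%")
for agency, share in agency_shares.items():
    print(f"{agency} share of flagged use cases: {share}%")
print(f"VA + DOJ combined: {combined_share}%")
```

By this rough math, about one in six reported use cases is flagged, and the VA and DOJ together account for roughly three quarters of them.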

The top three categories of AI uses reported were:

This tracks with what I’ve seen in government - the first wave of AI adoption often focuses on internal productivity and operational improvements before moving to citizen-facing applications.

Something important to note: not all these AI use cases are currently active. The inventory includes use cases at various stages:

In my experience working on government tech initiatives, there’s often a significant gap between planning a technology implementation and having it fully operational. I’d be curious to know exactly how many of these 2,133 use cases are actually in production today.

One of the most revealing aspects of the inventory is that OMB granted extensions to 206 use cases that couldn’t meet the December 1, 2024 deadline for implementing required risk management practices.

The most common areas where agencies needed extensions were:

This doesn’t surprise me at all. In government, the governance and risk management frameworks often lag behind the technology implementation. It’s encouraging to see OMB putting emphasis on these risk management practices, but the extensions show there’s still work to be done.

While I haven’t had time to dive into all 2,133 use cases (that would make for a very long blog post), a few interesting examples caught my eye:

DHS has disclosed a new internal agency chatbot called DHSChat. As government agencies struggle with knowledge management and information sharing across silos, internal chatbots could be a game-changer. I’d be interested to see how DHS is handling security and data governance with this tool.

The VA’s high number of rights-impacting AI use cases (145) suggests they’re applying AI extensively in healthcare and benefits contexts. Given the VA’s massive healthcare system, there’s enormous potential for AI to improve care quality and efficiency - but also significant risks that need careful management.

The inventory, while comprehensive, doesn’t include everything:

There’s also the matter of agencies that haven’t yet submitted their inventories. The data shows that while 41 agencies submitted, some notable ones like the Small Business Administration were still finalizing their submissions.

The substantial increase in reported AI use cases (from a reported 710 to 2,133 in one year) shows that government AI adoption is accelerating rapidly. However, the details suggest we’re still in the early-to-middle stages of the adoption curve.
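For a sense of scale, the year-over-year change can be worked out in a few lines of Python. This is a minimal sketch that takes the 710 and 2,133 figures as quoted in this post; the names are illustrative, and the result is only as reliable as the prior-year count.

```python
# Counts as quoted in this post; the prior-year figure is the less certain one.
PREVIOUS_COUNT = 710
CURRENT_COUNT = 2133

# How many times larger the current inventory is than the previous one.
growth_multiple = round(CURRENT_COUNT / PREVIOUS_COUNT, 2)

# Percentage increase relative to the previous inventory.
percent_increase = round(
    100 * (CURRENT_COUNT - PREVIOUS_COUNT) / PREVIOUS_COUNT, 1
)

print(f"Growth multiple: {growth_multiple}x")
print(f"Percent increase: {percent_increase}%")
```

In other words, the reported count roughly tripled in a year, though some of that jump may reflect broader reporting rather than new deployments.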

Many use cases focus on productivity and internal operations rather than transformative citizen services. And the extensions granted for risk management implementation suggest that agencies are still developing the governance frameworks needed for responsible AI use.

Having worked on government technology initiatives, I know firsthand that change happens slowly - often for good reasons. The deliberate pace allows for proper risk assessment, but it can be frustrating when you see the potential for technology to improve services.

As this inventory continues to evolve, I’d love to see:

The 2024 Federal AI Use Cases Inventory represents significant progress in transparency around government AI adoption. The sheer volume of use cases shows that agencies are actively exploring AI’s potential, while the attention to rights and safety impact suggests they’re (mostly) doing so responsibly.

That said, we’re clearly still in the early chapters of the government AI story. Many of these use cases are still in development, and agencies are working to build the governance frameworks needed for responsible implementation.

With the rescission of Executive Order 14110 in January 2025, there’s now some uncertainty about the regulatory framework that will guide federal AI adoption going forward. Even so, the inventory remains a useful record of the types of AI applications agencies are pursuing and the challenges they’re facing.

As someone who cares deeply about both technology innovation and responsible governance, I’m cautiously optimistic about what I’m seeing. The inventory shows momentum, but also a thoughtful approach to adoption that recognizes AI’s unique risks. I’m also keenly interested in how the new administration’s policies will shape federal AI implementation in the coming years.

If you’re interested in AI implementation, you might want to check out my posts on practical AI applications in personal projects or my guide to getting AWS AI certified. And if you’re working on government AI initiatives and want to chat more about this space, reach out to me. I’d love to hear about your experiences.